Emotional Robotics

Ravi Vaidyanathan/Suresh Devasahayam

It is now well accepted that for a robot to interact effectively with humans, it should manifest some form of believable behaviour that establishes appropriate social expectations and regulates interaction. We are working with the Bristol Robotics Laboratory (BRL) to introduce a simplified robotic system capable of emotional (affective) human-robot communication without the complexity of full facial actuation, and to empirically assess human response to that robot in social interaction. In addressing these two issues, we provide a basis for the larger goal of developing a mechanically simple platform for deeper human-robot cooperation, as well as a method to quantify, neurologically, human response to affective robots. The scope encompasses: 1) the development of a hybrid-face humanoid robot capable of emotive response, including pupil dilation, and 2) the testing of the hybrid-face robot by mapping human response to it qualitatively (interview/feedback) and quantitatively (EEG ERPs) through human-robot testing. The principal contributions lie in modelling the emotion affect space and mapping neurological response to robot emotion.

Bristol-Elumotion Robotic Torso (BERT2): a social robot with an expressive face, meant to help researchers design intelligent systems capable of ensuring mutual trust, safety, and effective cooperation with human beings

Emotive robot having a conversation conveying its 'empathy'

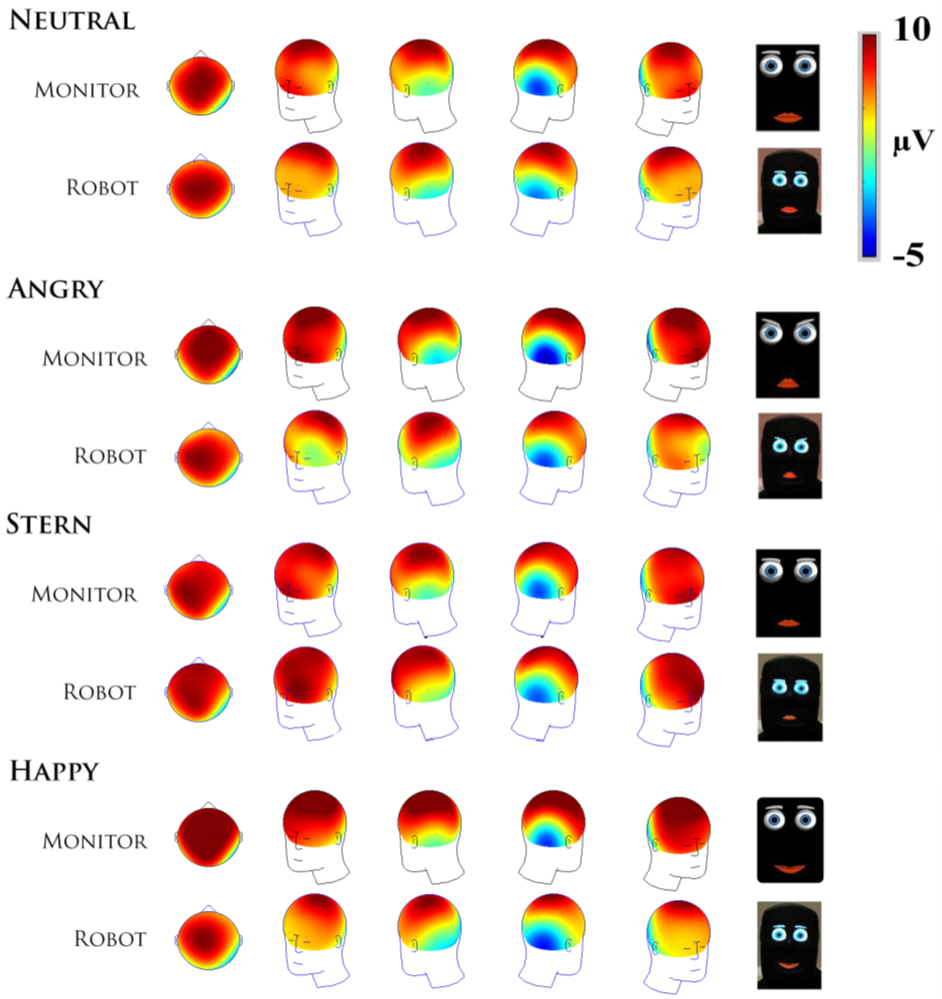

Brain Activity Map: The BERT2 robot displayed Neutral, Angry, Stern and Happy facial expressions to a group of human test subjects while their brain activity (EEG) was recorded. The color map shows the parts of the brain that were most active when the person viewed the robot emotion. The spatial EEG topography of the evoked negative N170 response to robot facial expressions shows brain activity similar to that evoked by recognizing the same human facial expressions.

The Biomechatronics Laboratory is collaborating with Emotix on the companion social robot Miko to commercialize this work. Miko has been released to the public in Asia, Europe, and the USA. See http://www.emotix.in/#2 for more information on this product.

Miko: Meet Miko, India's first companion robot, who will soon be available internationally

External Collaborators

Professor Suresh Devasahayam, Christian Medical College Vellore, Vellore, India

Professor Chris Melhuish, Dr Appolinaire Etoundi, Bristol Robotics Laboratory, Bristol, UK

Professor Christopher James, University of Warwick, Coventry, UK

Emotix, Mumbai, India

Sponsors

UK Dementia Research Institute (DRI)

UK Global Challenges Research Fund (GCRF)

European Union Framework 7 Research Program
